This is the most comprehensive list of DNS best practices and tips on the planet.

In this guide, I’ll share my best practices for DNS security, design, performance, and much more.

Table of contents:

- Have at least Two Internal DNS servers

- Use Active Directory Integrated Zones

- Best DNS Order on Domain Controllers

- Domain-joined Computers Should Only Use Internal DNS Servers

- Point Clients to The Closest DNS Server

- Configure Aging and Scavenging of DNS records

- Setup PTR Records

- Root Hints vs Forwarding (Which one is the best)

- Enable Debug Logging

- Use CNAME Records for Alias (Instead of A Record)

- DNS Best Practice Analyzer

- Bonus: DNS Security Tips

Warning: I do not recommend making changes to critical services like DNS without testing and getting approval from your organization. You should be following a change management process for these types of changes.

Have at least Two Internal DNS servers

In small to large environments, you should have at least two DNS servers for redundancy. DNS and Active Directory are critical services, and if they fail you will have major problems. Having two servers ensures DNS will still function if one of them fails.

In an Active Directory domain, everything relies on DNS to function correctly. Even browsing the internet and accessing cloud applications relies on DNS.

I’ve experienced a complete domain controller/DNS failure and I’m not joking when I say almost everything stopped working.

In the diagram below, my site has two domain controllers that are also DNS servers. The clients are configured to use DHCP, and the DHCP server automatically configures each client with a primary and secondary DNS server. If DC1/DNS goes down, the client will automatically use its secondary DNS server to resolve hostnames. If DC1 went down and there was no internal secondary DNS server, the client would be unable to access resources such as email, apps, the internet, and so on.

Bottom line: Ensure you have redundancy in place by having multiple DNS/Active Directory servers.

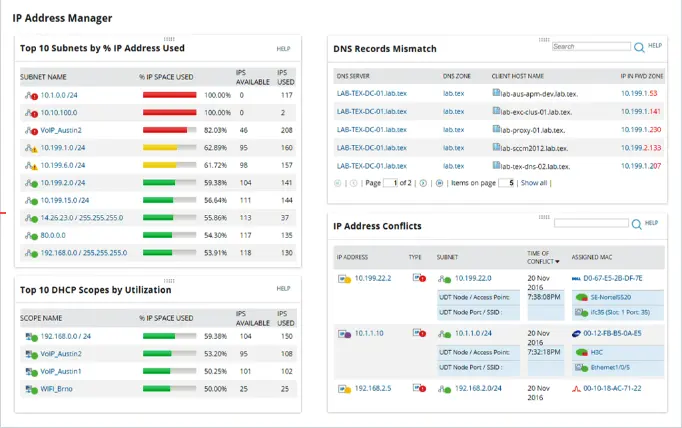


Use Active Directory Integrated Zones

To make the deployment of multiple DNS servers easier you should use Active Directory integrated zones. You can only use AD integrated zones if you have DNS configured on your domain controllers.

AD integrated zones have the following advantages:

- Replication: AD integrated zones store data in the AD database as container objects. This allows for the zone information to get automatically replicated to other domain controllers. The zone information is compressed allowing data to be replicated fast and securely to other servers.

- Redundancy: Because the zone information is automatically replicated this prevents a single point of failure for DNS. If one DNS server fails the other server has a full copy of the DNS information and can resolve names for clients.

- Simplicity: AD Integrated zones automatically update without the need to configure zone transfers. This simplifies the configuration while ensuring redundancy is in place.

- Security: If you enable secure dynamic updates, then only authorized clients can update their records in DNS zones. In a nutshell, this means only computers that are members of the Active Directory domain can register themselves with the DNS server. The DNS server denies requests from computers that are not part of the domain.
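As a rough sketch, a zone can be moved into Active Directory and limited to secure dynamic updates from PowerShell. This assumes it is run on a domain controller with the DNS role and its DnsServer module; the zone name `ad.example.com` is a placeholder, not a value from this guide.

```powershell
# Assumption: run on a domain controller that hosts the DNS role.
# "ad.example.com" is a placeholder zone name.
$zone = "ad.example.com"

# Check how the zone is currently stored and updated.
Get-DnsServerZone -Name $zone |
    Select-Object ZoneName, ZoneType, IsDsIntegrated, DynamicUpdate

# Convert a file-backed primary zone to an AD integrated zone that
# replicates to all DNS servers in the domain.
ConvertTo-DnsServerPrimaryZone -Name $zone -ReplicationScope "Domain" -PassThru -Force

# Allow only secure dynamic updates.
Set-DnsServerPrimaryZone -Name $zone -DynamicUpdate "Secure"
```

If `IsDsIntegrated` already shows True, the conversion step can be skipped.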

Best DNS Order on Domain Controllers

I’ve seen lots of discussion on this topic. What is the best practice for DNS order on domain controllers?

If you do a search on your own you will come across various answers BUT the majority recommends the configuration below.

This is also Microsoft’s recommendation.

- Primary DNS: Set to another DC in the site

- Secondary DNS: Set to itself using the loopback address

Let’s look at a real-world example.

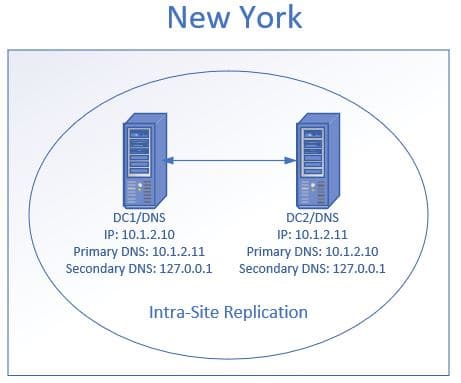

In the above diagram, I have two domain controllers/DNS servers at the New York site. DC1 has its primary DNS set to its replication partner, DC2, and its secondary DNS set to itself using the loopback address. DC2 has its primary DNS set to DC1 and its secondary DNS set to itself using the loopback address.

Microsoft claims this configuration improves performance and increases the availability of DNS servers. If you point the primary DNS to itself first it can cause delays.

Source: https://technet.microsoft.com/en-us/library/ff807362(v=ws.10).aspx
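For illustration, the order described above could be applied on DC1 roughly as follows. The adapter name `Ethernet` and the address `10.1.1.12` for DC2 are assumptions made for this sketch; substitute your own values.

```powershell
# Assumptions: run on DC1; the adapter is named "Ethernet" and
# DC2's address is 10.1.1.12 (both are placeholders).
Set-DnsClientServerAddress -InterfaceAlias "Ethernet" `
    -ServerAddresses ("10.1.1.12", "127.0.0.1")

# Confirm the order: partner DC first, loopback second.
Get-DnsClientServerAddress -InterfaceAlias "Ethernet" -AddressFamily IPv4
```

On DC2 the same commands would be used with DC1's address as the first entry.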

Domain Joined Computers Should Only Use Internal DNS Servers

Your domain joined computers should have both the primary and secondary DNS set to an internal DNS server. External DNS servers cannot resolve internal hostnames so this could result in connectivity issues and prevent the computer from accessing internal resources.

Let’s look at an example of why this is a bad setup.

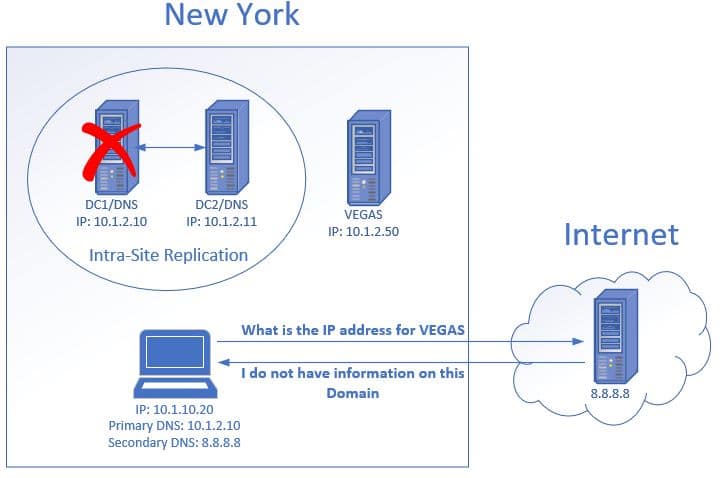

- The client makes a request to an internal server called VEGAS.

- The client decides to contact its secondary DNS server which is 8.8.8.8. It asks the server what the IP address is for the host VEGAS.

- The external DNS knows nothing about this host, therefore, it cannot provide the IP address.

- This results in the client being unable to access the VEGAS file server.

Typically, the primary DNS server is used first if it is available, but if it is unresponsive the client may switch to the secondary DNS server. It may take a reboot for the computer to switch back to the primary DNS server, which can result in frustrated users and calls to the helpdesk.

The recommended solution is to have two internal DNS servers and always point clients to them rather than an external server.

Point Clients to the Closest DNS Server

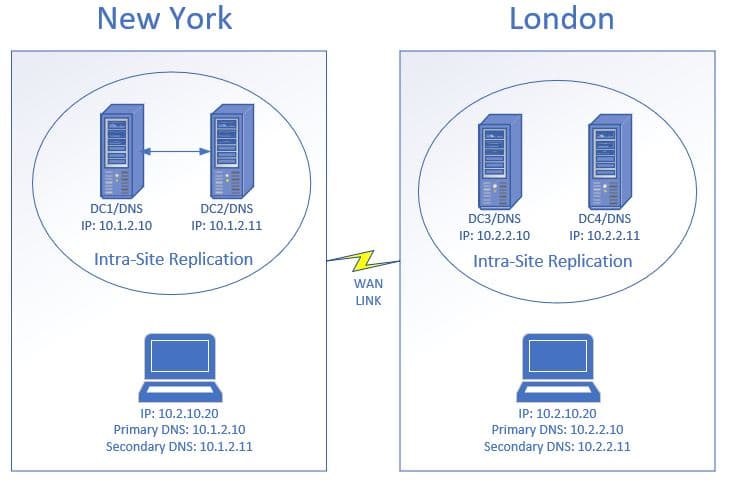

Pointing clients to the DNS servers at their own site will minimize traffic across WAN links and provide faster DNS queries to clients.

In the diagram above the client computers are configured to use the DNS servers that are at their site. If the client in New York was incorrectly configured to use the DNS servers in London this would result in slow DNS performance. This would affect the user’s apps, internet access, and so on. I promise you users will be complaining about how slow everything is.

The best way to automatically configure the right DNS servers is by using DHCP. You should have a separate DHCP scope set up for each site that includes the primary and secondary DNS servers for that site.
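A minimal sketch of per-site DNS options on a Windows DHCP server might look like this. The scope IDs and server addresses are made-up placeholders for a New York and a London scope.

```powershell
# Assumptions: run on the Windows DHCP server; scope IDs and DNS
# server addresses below are placeholders for two sites.

# New York scope hands out the New York DNS servers.
Set-DhcpServerv4OptionValue -ScopeId 10.1.1.0 -DnsServer 10.1.1.11, 10.1.1.12

# London scope hands out the London DNS servers.
Set-DhcpServerv4OptionValue -ScopeId 10.2.1.0 -DnsServer 10.2.1.11, 10.2.1.12

# Review the DNS servers option (option 6) on one scope.
Get-DhcpServerv4OptionValue -ScopeId 10.1.1.0 -OptionId 6
```

The cmdlet checks that the listed DNS servers respond before saving the option, so run it when those servers are reachable.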

Configure Aging and Scavenging of DNS Records

DNS aging and scavenging allow for the automatic removal of old unused DNS records. This is a two-part process:

Aging: Newly created DNS records get a timestamp applied.

Scavenging: Removes DNS records that have an outdated timestamp based on the time configured.

Why is this needed?

There will be times when computers register multiple DNS entries with different IP addresses. This can be caused by computers moving around to different locations, computers being re-imaged, or computers being dropped and added back to the domain.

Having multiple DNS entries will cause name resolution problems which result in connectivity issues. DNS aging and scavenging will resolve this by automatically deleting the DNS record that is not in use.

Aging and Scavenging only apply to DNS resource records that are added dynamically.
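As a hedged example, aging and scavenging can be switched on from PowerShell on the DNS server. The zone name is a placeholder and the 7-day intervals are illustrative values chosen for this sketch, not a recommendation from this guide.

```powershell
# Assumptions: "ad.example.com" is a placeholder zone, and the 7-day
# intervals are illustrative values only.
$zone = "ad.example.com"

# Turn on aging for the zone. A record's timestamp is not refreshed
# during the no-refresh interval; after that it can be refreshed
# during the refresh interval.
Set-DnsServerZoneAging -Name $zone -Aging $true `
    -NoRefreshInterval 7.00:00:00 -RefreshInterval 7.00:00:00

# Set the server's scavenging interval (0 disables scavenging) and
# make aging the default for newly created zones.
Set-DnsServerScavenging -ScavengingState $true -ScavengingInterval 7.00:00:00

# Review the settings before relying on them.
Get-DnsServerZoneAging -Name $zone
Get-DnsServerScavenging
```

It is worth checking the timestamps on important records first, because stale dynamic records become eligible for deletion once both intervals have passed.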

Resources:

How to Configure DNS Aging and Scavenging (Cleanup Stale DNS Records)

Setup PTR Records for DNS Zones

PTR records resolve an IP address to a hostname. Unless you are running your own mail server, PTR records may not be required.

But… they are extremely helpful for troubleshooting and increasing security.

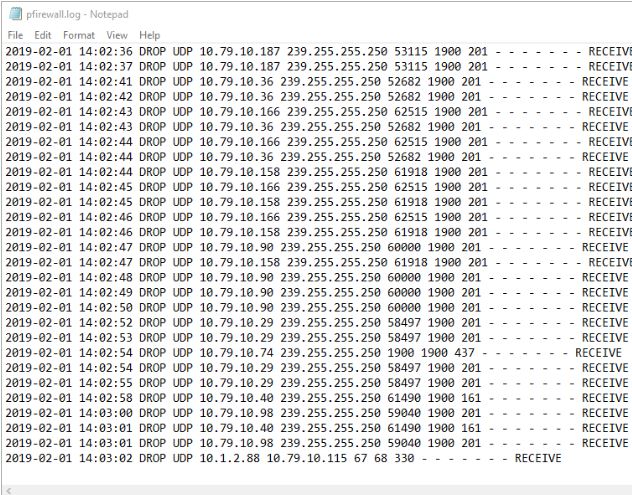

Some systems like firewalls, routers, and switches only log an IP address. Take, for example, the Windows Firewall logs.

In this example, helpdesk was troubleshooting a printer issue and thought 10.1.2.88 was a printer being blocked by the firewall. Because I have PTR records set up, I was able to quickly look it up using the nslookup command.

10.1.2.88 resolves to nodaway.ad.activedirectorypro.com, which I know is a server and not a printer. If I didn’t have a PTR record set up, I would have been digging through inventory trying to find more information about this IP.

There is really no reason not to set up PTR records. They are easy to set up and use almost no additional resources on the server. See my complete guide on setting up reverse lookup zones and PTR records.
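The following sketch shows one way a reverse lookup zone and a PTR record could be created with the DnsServer module. It reuses the 10.1.2.88 address and hostname from the example above; the /24 subnet size and the replication scope are assumptions.

```powershell
# Assumptions: the subnet is 10.1.2.0/24 and the zone should be
# AD integrated with domain-wide replication.

# Create the reverse lookup zone (2.1.10.in-addr.arpa).
Add-DnsServerPrimaryZone -NetworkId "10.1.2.0/24" -ReplicationScope "Domain"

# Add a PTR record for 10.1.2.88; the record name is the host octet.
Add-DnsServerResourceRecordPtr -Name "88" -ZoneName "2.1.10.in-addr.arpa" `
    -PtrDomainName "nodaway.ad.activedirectorypro.com"

# Reverse lookup, comparable to running "nslookup 10.1.2.88".
Resolve-DnsName -Name 10.1.2.88 -Type PTR
```

Clients that register dynamically can also create their own PTR records once the reverse zone exists and accepts dynamic updates.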


Root Hints vs DNS Forwarders (Which one is the best)

By default, Windows DNS servers are configured to use root hint servers for external lookups. Another option for external lookups is to use forwarders.

Basically, both options are ways to resolve hostnames that your internal servers cannot resolve.

So which one is the best?

Through my own experience and research, it really comes down to personal preference.

Here are some general guidelines that will help you decide:

- Use root hints if your main concern is reliability (Windows default)

- Forwarders might provide faster DNS lookups. You can use benchmarking tools to test lookup response times, link included in the resource section.

- Forwarders can also provide security enhancements (more on this below)

- Forwarders must be configured manually on each DC

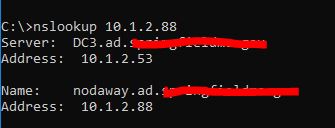

For years I used the default setting (root hints), then I was introduced to Quad9 at a security conference. Quad9 is a free, recursive, anycast DNS platform that provides end users robust security protections, high performance, and privacy. In a nutshell, Quad9 checks each DNS lookup against a list of bad domains; if a client requests a domain on the list, the request is blocked.

I’ve used this service for over a year now and I’ve had zero issues. Since security has been a big concern for me it was my personal preference to switch to Quad9 forwarders from root hints. It is providing fast and reliable lookups with the added bonus of security.

Quad9 does not provide any reporting or analytics. The blocked requests are logged in the Windows Server DNS debug logs, so make sure you read the next section on how to enable them. Blocked requests are recorded as NXDOMAIN, so you could build a report by looking for that in the logs. Keep in mind that lookups for domains that genuinely do not exist are also recorded as NXDOMAIN.
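For reference, switching a Windows DNS server to Quad9 forwarders could be sketched like this. The two addresses are Quad9's public resolvers; keeping root hints as a fallback is a choice made for this example.

```powershell
# Quad9's public resolver addresses.
$quad9 = "9.9.9.9", "149.112.112.112"

# Replace the current forwarder list on this DNS server and keep
# root hints as a fallback if the forwarders do not respond.
Set-DnsServerForwarder -IPAddress $quad9 -UseRootHint $true

# Forwarders are set per server, so repeat on each DC and verify.
Get-DnsServerForwarder
```

Because `Set-DnsServerForwarder` overwrites the existing list, note any current forwarders before running it.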

Additional Resources:

OpenDNS – is another company that offers this service, it has a high cost but includes additional features and reporting.

How Quad9 Works – This page shows how to set up Quad9 on an individual computer. If you have your own DNS servers, DO NOT DO THIS. You will want to use your DNS server and add Quad9 as a forwarder. This page provides some additional details and is the main reason why I included it. You could use these steps for your home computer or devices that just need internet access.

DNS Benchmark tool – Free tool that allows you to test the response times of any nameservers. This may help you determine if you want to stick with root hints or use forwarders.

Enable DNS Debug Logging

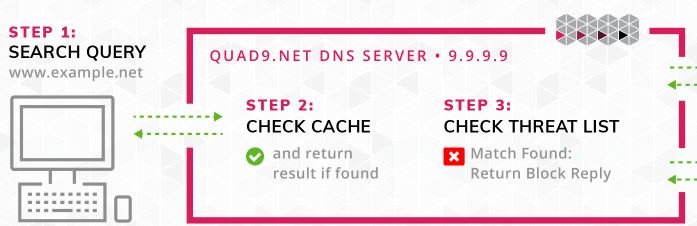

DNS debug logs can be used to track down problems with DNS queries, updates, and other DNS errors. It can also be used to track client activity.

With logging tools like Splunk, you can create reports on top domains and top clients, and find potentially malicious network traffic.

Microsoft has a log parser tool that generates the output below:

You should be able to pull the debug log into any logging tool or script to create your own reports.

How to Enable DNS Debug Logs

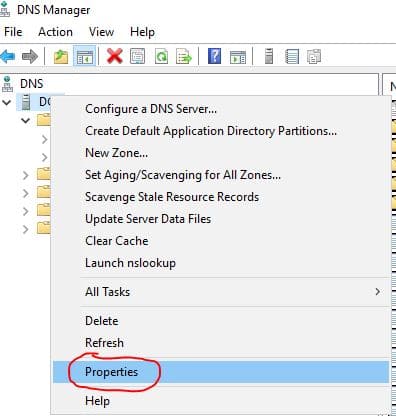

Step 1: In the DNS console, right-click your DNS server and select Properties

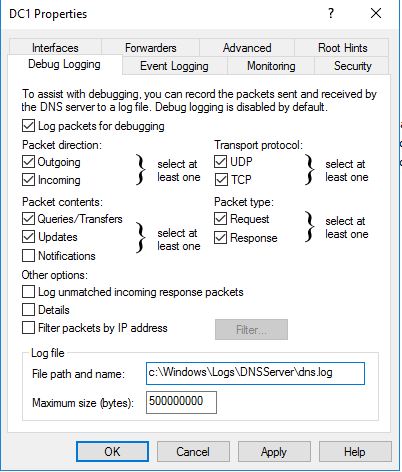

Step 2: Click on the Debug Logging tab and check “Log packets for debugging”

Change the default path and max size, if needed.
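The same settings can also be applied from PowerShell. This is a sketch only: the log path is a placeholder, the set of packet types is one reasonable selection, and the search at the end assumes the response code appears as the text NXDOMAIN on each logged packet line.

```powershell
# Assumption: D:\DNSLogs\dns.log is a placeholder path on a volume
# with enough free space for the log.
$logging = @{
    Queries             = $true   # log standard queries
    Answers             = $true   # log responses
    QuestionTransaction = $true   # log question transactions
    SendPackets         = $true   # outgoing packets
    ReceivePackets      = $true   # incoming packets
    UdpPackets          = $true
    TcpPackets          = $true
    EnableLoggingToFile = $true
    LogFilePath         = "D:\DNSLogs\dns.log"
}
Set-DnsServerDiagnostics @logging

# Confirm what is now being logged.
Get-DnsServerDiagnostics

# Show the first 20 logged packets that contain NXDOMAIN.
Select-String -Path "D:\DNSLogs\dns.log" -Pattern "NXDOMAIN" |
    Select-Object -First 20
```

Debug logging adds load on a busy server, so consider enabling it for a limited period while troubleshooting.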

Additional Resources:

Parsing DNS server log to track active clients

Use CNAME Record for Alias (Instead of A Record)

- A record maps a name to an IP address.

- CNAME record maps a name to another name.

If you use A records to create aliases, you will end up with multiple records, and over time this will become a big mess. If you have PTR records configured, this will also create additional records in that zone, which will add to the mess and create bigger problems.

If you need to create an alias, it’s better to use CNAME records. This will be easier to manage and prevent multiple DNS records from being created.

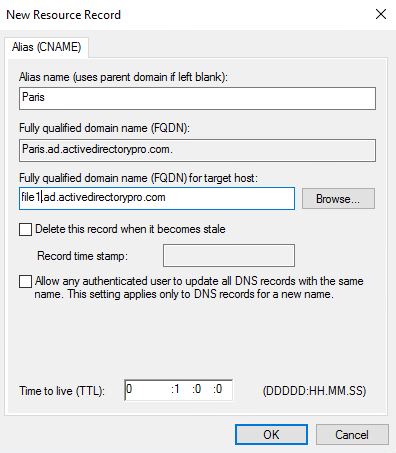

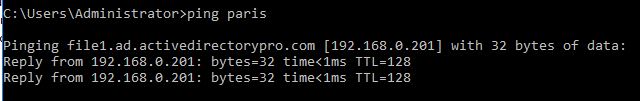

How to Create an Alias CNAME record

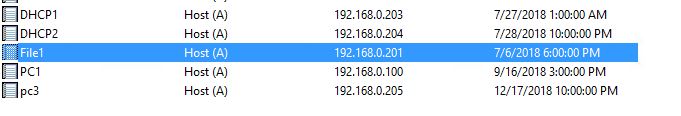

I have an A Record setup for my file server called file1 that resolves to IP 192.168.0.201

Our Dev team wants to rename the server to Paris to make it more user friendly. Instead of renaming the server, I’ll just create a CNAME record.

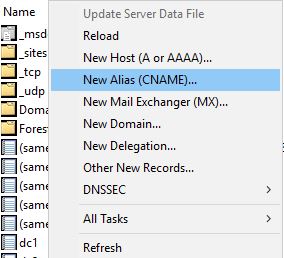

Right click in the zone and click on New Alias (CNAME)

For Alias name, I’ll enter Paris

The alias name resolves to file1 so I add that to the target host box:

Click OK and you’re done!

Now I can access Paris by hostname which resolves to file1

Easy, right?

This keeps DNS clean and helps prevent DNS lookup issues.
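The alias from the walkthrough above could also be created from PowerShell, roughly as follows. The zone name `ad.example.com` is a placeholder; `paris` and `file1` come from the example.

```powershell
# Assumption: "ad.example.com" is a placeholder for your zone name.
$zone = "ad.example.com"

# Create the alias "paris" that points to the existing host "file1".
Add-DnsServerResourceRecordCName -ZoneName $zone `
    -Name "paris" -HostNameAlias "file1.$zone"

# The alias should resolve to file1 and then to its A record.
Resolve-DnsName -Name "paris.$zone"
```

If file1 ever changes its IP address, only its A record needs updating and the alias follows automatically.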

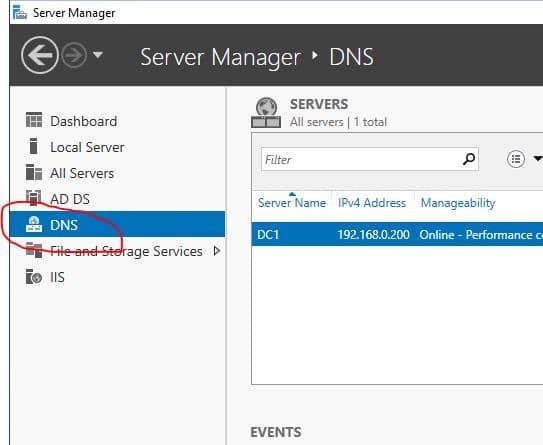

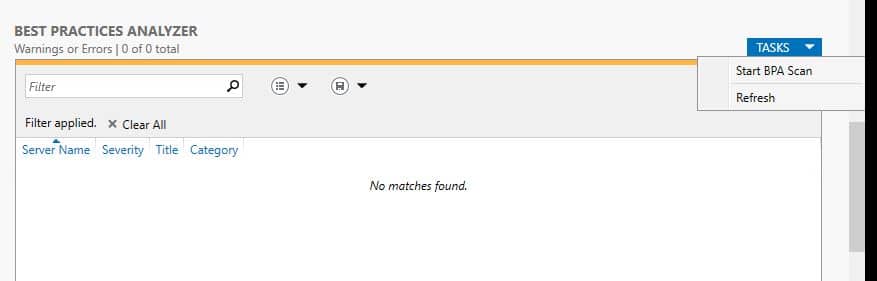

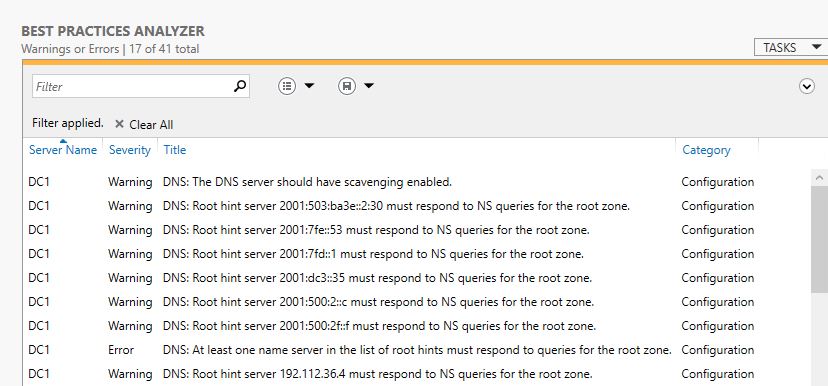

Use DNS Best Practice Analyzer

The Microsoft Best Practices Analyzer (BPA) is a tool that scans server roles to check your configuration against Microsoft guidelines. It is a quick way to troubleshoot and spot potential configuration issues.

The BPA can be run using the GUI or PowerShell; instructions for both are below.

How To Run BPA DNS Using The GUI

Open Server Manager, then click DNS

Now scroll down to the Best Practices Analyzer section, click Tasks, then select “Start BPA Scan”

Once the scan completes the results will be displayed.

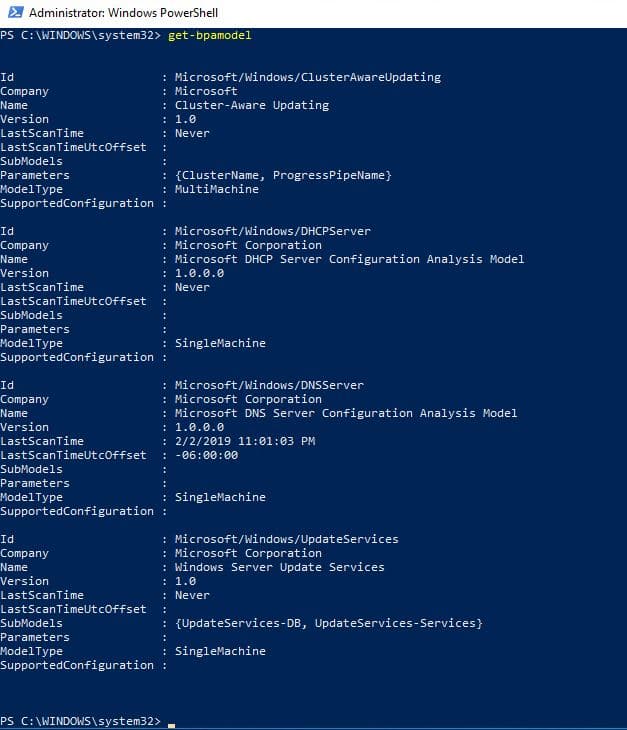

How To Run BPA DNS Using PowerShell

You will first need the ID of the role. Run this command to get the ID:

Get-BpaModel

I can see the ID for DNS is Microsoft/Windows/DNSServer. I take that ID and use this command to run the BPA for DNS.

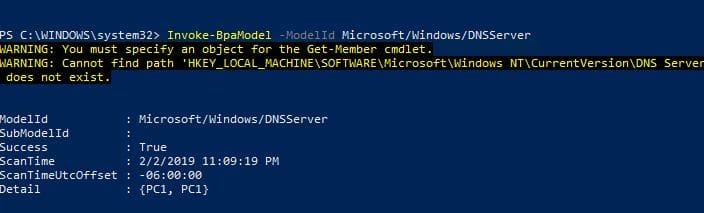

Invoke-BpaModel "Microsoft/Windows/DNSServer"

You may get some errors; this is normal.

The above command only runs the analyzer; it does not automatically display the results.

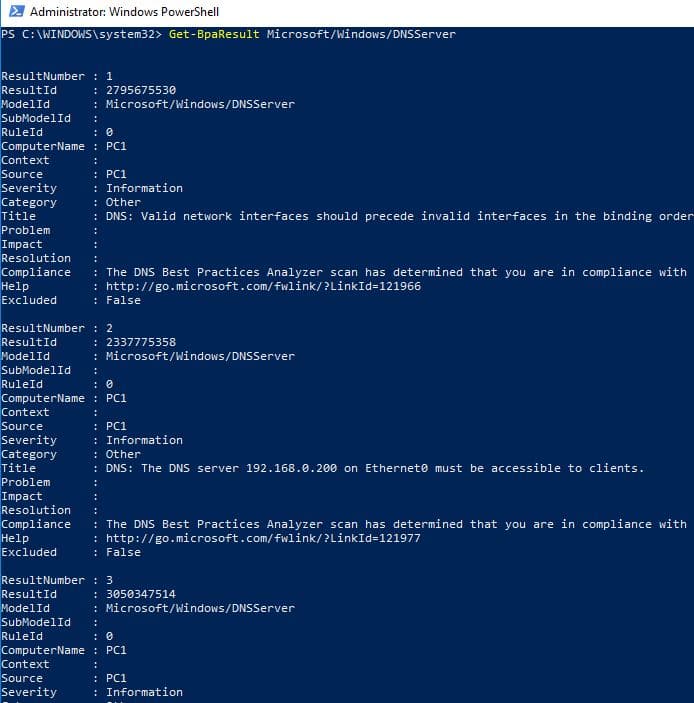

To display the results run this command:

Get-BpaResult Microsoft/Windows/DNSServer
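To cut the results down to items that need attention, the commands above can be combined with a filter. This is a small sketch; the choice of columns is just one possibility.

```powershell
# The model ID is the one reported by Get-BpaModel for the DNS role.
$model = "Microsoft/Windows/DNSServer"

# Run the scan.
Invoke-BpaModel -ModelId $model

# Show only warnings and errors, with the suggested resolution.
Get-BpaResult -ModelId $model |
    Where-Object { $_.Severity -ne "Information" } |
    Select-Object Severity, Title, Resolution |
    Format-List
```

The filtered objects can be piped to `Export-Csv` instead of `Format-List` if you want to keep a record of each scan.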

Bonus: DNS Security Tips

I think we can all agree that DNS is an important service. How would anything function without it? Now let’s look at a few ways we can secure this service. Some of these features are enabled by default on Windows servers.

- Filter DNS Requests (Block Bad Domains)

- Secure DNS forwarders

- DNS Cache Locking

- DNS Socket Pool

- DNSSEC

Filter DNS Requests (Block Bad Domains)

One of the best ways to prevent viruses, spyware, and other malicious traffic is to block the traffic before it even hits your network.

This can be done by filtering DNS traffic through a security appliance that checks the domain name against a list of bad domains. If the domain is on the list the traffic will be dropped preventing any further communication between the bad domain and client. This is a common feature on next generation firewalls, IPS systems (Intrusion Prevention System), and other security appliances.

I’ve been using a Cisco FirePower firewall that provides this service. Cisco provides a feed (list of bad domains) that is automatically updated on a regular basis. In addition, I can add additional feeds or manually add bad domains to the list. I’ve seen a huge decrease in viruses and ransomware-type threats since I’ve been filtering DNS requests. I’ve been amazed at how much bad traffic this detects and blocks, with surprisingly few false positives!

Additional Resources:

Cisco Next Generation Firewall official site

https://www.cisco.com/c/en/us/products/security/firewalls/index.html

Palo Alto Networks – Another popular firewall/IPS system

https://www.paloaltonetworks.com/products/secure-the-network/next-generation-firewall

Secure DNS Forwarders

Secure DNS forwarders are another way to filter and block DNS queries.

In addition to blocking malicious domains, some forwarding services offer web content filtering. This allows you to block requests based on a category like adult content, games, drugs, and so on. One big advantage this has over an on-premises appliance like a firewall is that it can protect devices when they are off the network. It may require a client to be installed on the device, but it would direct all DNS traffic through the secure DNS forwarder whether the device was on the internal or an external network.


DNS Cache Locking

DNS cache locking allows you to control when the DNS cache can be overwritten.

When a DNS server performs a lookup for a client, it stores that lookup in the cache for a period of time. This allows the DNS server to respond faster to the same lookups at a later time. If I went to espn.com the DNS server would cache that lookup, so if anyone went to it at a later time it would already be cached allowing for a faster lookup.

One type of attack is poisoning the cache with false records. For example, we have espn.com in the cache, and an attacker could alter this record to redirect to a malicious site. The next time someone went to espn.com, it would send them to the malicious site.

DNS cache locking blocks records in the cache from being changed until a configured percentage of their TTL has passed. Windows Server 2016 has this feature turned on by default.
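A quick way to check or set cache locking from PowerShell is sketched below. The value 100 is shown as an example and locks cached records for their full TTL.

```powershell
# Show the current cache settings, including LockingPercent.
Get-DnsServerCache

# Example: lock cached records for 100 percent of their TTL, so they
# cannot be overwritten until the TTL has fully expired.
Set-DnsServerCache -LockingPercent 100
```

Lower percentages allow cached records to be overwritten earlier, which weakens the protection.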

Additional Resources

https://nedimmehic.org/2017/04/25/how-to-deploy-and-configure-dns-2016-part6/

DNS Socket Pool

DNS socket pool allows the DNS server to use source port randomization for DNS lookups. Instead of using the same source port over and over, the DNS server picks a random port from a pool of available sockets, which makes it difficult for an attacker to guess the source port of a DNS query.

This is also enabled by default on Windows Server 2016.
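The pool size can be checked and changed with the `dnscmd` tool from a PowerShell prompt on the DNS server. This is a sketch; 2500 is used here because it is the usual default, not as a tuning recommendation.

```powershell
# Show the current socket pool size.
dnscmd /Info /SocketPoolSize

# Example only: set the pool size explicitly.
dnscmd /Config /SocketPoolSize 2500

# The new size takes effect after the DNS service restarts.
Restart-Service -Name DNS
```

Restarting the DNS service briefly interrupts name resolution on that server, so do it while your second DNS server is available.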

Additional Resources

Microsoft Configure the Socket Pool

DNSSEC

DNSSEC adds a layer of security that allows the client to validate the DNS response. This validation process helps prevent DNS spoofing and cache poisoning.

DNSSEC works by using digital signatures to validate that responses are authentic. When a client performs a DNS query, the DNS server attaches a digital signature to the response, which allows the client to validate the response and prove it was not tampered with.
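To see the signatures described above, you can ask for DNSSEC data in a lookup. This is a hedged sketch: `example.com` and `ad.example.com` are placeholder names, and signature records only come back if the zone is signed and the resolver passes them through.

```powershell
# Request DNSSEC records along with the answer. For a signed zone,
# the output includes RRSIG records next to the A records.
Resolve-DnsName -Name "example.com" -Type A -DnssecOk

# On a Windows DNS server, check whether a hosted zone is signed.
# "ad.example.com" is a placeholder zone name.
Get-DnsServerZone -Name "ad.example.com" |
    Select-Object ZoneName, IsSigned
```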


Brilliant!

the server forwarders cannot be updated.

the ip address is invalid

Any one know the clear reason for this error….

Hi Robert,

I have a question: when you enable Aging/Scavenging, do you need to create static DNS records for all servers?

I think not, because we permit secure DNS updates on AD integrated zones.

Hi Robert,

I am thankful for your concise article.

My questions are around using AD Integrated Primary DNS zones on DCs and Secondary DNS zones on domain joined servers for load balancing and the replication between all servers and zones.

AD Integrated Primary zones replicate using AD.

Secondary type zones need zone transfers and notifications to replicate.

What is the recommended configuration of the Secondary DNS zone and ideal replication strategy?

Should each Secondary DNS zone server have a zone NS server record?

Should each Secondary DNS zone server instance list all Primary DNS zone servers as masters?

Should all of the Primary DNS zone servers enable zone transfers to all NS servers or specific listed Secondaries?

Should all of the Primary DNS zone servers notify all NS servers or specific listed secondaries?

When there are multiple sites with WAN links between them do any of the above recommendations change?

Is there an ideal hierarchy method of DNS replication for zones that have Primary and Secondary DNS servers?

I realise that Secondary Zone types are read only and that the majority of client DNS traffic is forwarded to external DNS servers (or cached). Should I use Secondary zone types on load balancing servers or rather additional Primary zone DNS servers for simplicity of replication?

I appreciate your time in responding.

Very informative.

Question:

If you have (2) IP Addresses in Forwarders, is there anything to be gained by having the same (2) IP Addresses in Conditional Forwarders?

If there is nothing to be gained, should you use Forwarders or Conditional Forwarders?

Thank you for your insight …

Resolve external names either with the root hints or with forwarders that point to specific DNS servers. Do not put the same IP addresses in both Forwarders and Conditional Forwarders.

Conditional forwarders are used to resolve names to specific domains by specifying the domain and the IP of its DNS servers. For example, if you have a trust relationship with another domain you could use conditional forwarders to tell DNS where the authoritative server is for that domain.

Thank you, Robert.

Just so I understand:

1. I remove the (2) IP Addresses from SERVERx Properties – Forwarders

2. I uncheck “Use root hints if no forwarders are available”.

3. I place the (2) IP Addresses above in Conditional Forwarders for the ISP domain.net.

Alternatively, I don’t use Conditional Forwarders.

On a totally different topic, I’ve recently observed odd settings in Windows Server 2012 R2 (SERVERx) DNS.

I initially setup SERVERx with all the appropriate DNS settings (Forward…Reverse…Conditional…) w/ no Trust Points and the DNS system setup Global Logs.

Now I see that SERVERx.Domain.local has appeared under DNS without me doing anything deliberate.

I examined all the DNS settings under this DNS entry and everything looks to be a duplicate of the SERVERx entries.

My other Windows Server 2019 Standard (SERVERy) only shows DNS settings for SERVERy.

Is it safe for me to delete the duplicate entry SERVERx.Domain.local or would I be messing up DNS?

I’m not sure of your setup or exactly what you are trying to accomplish.

1. To resolve external domain names, either specify forwarder servers or use the root hints. Your DNS server needs one of the two to resolve external names.

2. Conditional forwarders are for specific use cases like specifying the DNS servers for a specific domain.

I think I’ve got it now.

In resolving external domain names, I guess there’s no point in having the same IP addresses in Forwarders and Conditional Forwarders (which are the addresses for the ISP’s Name Servers).

Any thoughts on my question regarding the duplicate SERVERx and SERVERx.Domain.local entries?

I learned a lot from this, thank you!

You are welcome.

Excellent write up! I was wondering if there was a best practice regarding AD joined servers and DNS entries. Looking at the possibility of a static entry, vs a DHCP reservation, vs a dynamic DNS registration, especially with regards to scavenging.

I have run into multiple locations where scavenging is not configured but with a strong resistance due to poor DNS maintenance. Any thoughts would be appreciated!

Thanks!

Wonderful information.

I have a configuration that is AD Domain at the main office with 20 small remote workgroup locations ( 1 each printer, computer, time clock, access control and 2 Wireless access points) that are using VPN back to the main office. Should the DNS configuration in the remote router DHCP indicate the main office DNSs (2 each) or use should they be configured for the ISP DNS servers. Another possible configuration is to forward the DHCP requests to the main office DHCP server and configure for main office DNSs?

If the clients are domain joined and use internal resources I would point them to the internal DNS servers. I would use the remote router as a DHCP server to auto assign the IP settings to the clients.

I’ve used this setup many times for remote locations that are configured with a site-to-site VPN.

hello,

I have a question please: if we suspend a DNS zone, what will be the effect or impact?

The zones will be backed up and transferred to new DNS servers. So we will remove the zones because they will be managed by other primary DNS servers (not DCs).

I have installed a new additional domain controller, and in DNS management it shows only the NetBIOS name, not the full FQDN, which causes replication issues. Even when I try to change it in the DNS management console, it automatically reverts to the old NetBIOS name. Please support.

Hello.

Thank you for the great site.

how will you set the DNS on the network card / IPv 4 for DNS? if the Server is part of the Forest and you manage just the child domains?

Thank you

Set the preferred to another DC in the same site (if it’s running DNS). Then set alternate DNS to loopback address 127.0.0.1

Great article, but the multi-site and Cross DNS part got me thinking…is it necessary then to have multiple DC’s pointing to each other for DNS as it was first explained or does this change this need?

Do I need to have multiple DC’s in each site?

I’m asking because I’ve recently started working on changing out my older DC’s and stumbled across these topics elsewhere, so now I’m looking more into it.

I currently have 3 DC’s in 3 sites: HQ office, Branch office, and AWS for the servers there. All client computers (desktops and laptops) are located in either HQ or Branch office only. Root DC is at HQ, holds all FSMO roles, and is 2012 R2. Branch office and AWS DC’s are 2019.

Every DC is configured to point to itself and no secondary with the exception of the newest one I just added in AWS…by default this one put itself as primary and our HQ as secondary.

DNS has been working this way for years with no issues. So, now I’m wondering do I need to change my DNS as described earlier (using cross method) or leave as is? and do I need to add secondary DNS servers to each site?

It is still recommended to point 1st to another DNS server in the same site and 2nd to the loopback address. With that said I’ve seen many DCs point to itself with no issues. I’d say if you are not experiencing any issue then leave it as is.

Regarding multiple DC’s in each site. I would definitely have two at your HQ site but the branch offices it really depends. If you are hosting a lot of local resources (print server, file server, other application servers) that depend on AD then yes I would go with two. If most resources are in the cloud then I would stick with 1.

Thanks, this lines up with some of the other feedback I’ve received regarding this topic on other sites.

I’ll be putting in a 2nd DC in our main site and will leave the other two as is.

Great article -thanks a lot!

My question – on my Windows 2019 domain controllers – which are DNS-servers as well, the first entry on the DNS client side is pointing to ::1 (IPv6 loopback).

May that be a problem? – So that they are querying themselves first – instead of querying another DC/DNS server?

It is not what Microsoft recommends but a lot of people configure DCs this way and experience no issue.

Here is the article I’m referring to.

https://docs.microsoft.com/en-us/previous-versions/windows/it-pro/windows-server-2008-R2-and-2008/ff807362(v=ws.10)?redirectedfrom=MSDN

I used to be a DNS administrator for a large corporation. This write-up seems to cover the main topics about DNS very well.

A hoy,

Just read through your doco. In the first table in “Point Clients to the Closest DNS Server”, the client IP addresses are the same. I assume thats an error and shouldn’t be like that.

Cheers

Oops yea that is an error. The client IP address should be in the same subnet as the site.

Excellently mentioned with detailed information

Thank you for sharing your knowledge with the whole world.

Just a short question regarding DNS order on DCs. I read a lot of times not to use the loopback address. This advice was for Server 2008 R2 and changed over time. Instead you should use the actual IP of the DC. What do you think?

For AD integrated DNS of a Windows forest where Delegation is configured in the root domain for every child domain, does it make sense to limit Zone transfer of the AD Zone to only that domain’s DNS servers or would i be best to allow transfer to them all? -Each domain has 50 to 100 clients. -All forest Name Servers appear in the Name Server list for the AD Forward Lookup zone. I’d like to know to your comments on this please 😉 …

Wow. Amazing. Thank you. Do you have a Paypal tip button/link that you could add so we could say thanks with a tip? Just an idea.

Thanks for the feedback!

Cross DNS is not necessary since 2003. It was needed to solve “island” problem:

https://docs.microsoft.com/en-us/troubleshoot/windows-server/networking/dns-server-becomes-island

since 2003 it is not needed to have cross DNS settings because Replication uses Site-and-Trusts

cross DNS is valid for pre-2003 Windows Servers only:

https://docs.microsoft.com/en-us/troubleshoot/windows-server/networking/dns-server-becomes-island

What about dynamic updates? What are your thoughts on those?

Thanks

It’s enabled by default and I recommend leaving it enabled unless you have a specific reason to disable it. I find it helpful and have not run into any issues using it.

Wow good write up. Thanks so much for the time you put into this and sharing your highly valued knowledge in this format.

Great article. Thank you very much, it has answered several questions I had.

One last question that I have that wasn’t really touched on is. How is the forwarders configured on each of the DCs? If all of the DNS zones are AD integrated then would you configure each outlying DC to forward to

HQ – 3 DCs

Office1 – 1 DC

Office2 – 2 DC

HQ DCs forwarders to external (Quad9, Google, etc.) then have Office1 and Office2 setup to forward to DC1,2,3 in HQ?

I would forward them all to your external DNS of choice, there is no need to forward them to your HQ DNS server.

Thanks this is a great article.

I’m struggling to find the recommendation of DNS configuration for domain controllers for multiple sites, EG I have two sites with 2 DC’s at each. Should any of the dns be pointing to other dc’s at the remote site?

EG:

SITE 1: DC1, DC2

SITE 2: DC3, DC4

Dns Configurations:

DC1: DC2, DC3, DC4, Self

DC2: DC1, DC3, DC4, Self

DC3: DC4, DC1, DC2, Self

DC4: DC3, DC1, DC2, Self

Or should it just be local DC then self for everything?

Thanks in advance,

Daniel

MS doesn’t have clear documentation on this. This document does mention using the loopback address but not as the first DNS server.

The general consensus is to configure it like so (assuming all the DCs are also DNS servers)

Site 1:

DC1

Primary = DC2

Secondary = loopback address

DC2

Primary = DC1

Secondary = loopback address

I am also very curious about this question

There are 2 domain controllers at site A. The preferred DNS of each domain controller is to write the IP address of the other domain controller as the first choice, and the secondary DNS is 127.0.0.1. I have no doubt about this;

Are the two domain controllers at site B the same configuration? Don’t need to add the domain control address of site A?

Great article and great website. I am in the process of learning Windows Server. Happened on your website yesterday and couldn’t be happier with the content! So helpful!

Thanks Andrew. I’m glad you like it!

Very nice writeup. Thanks for sharing.

Hi! Excellent article! I’m quite lost about configs… Maybe you can give me a hint… When I search with nslookup, I’m getting 2 timeouts. Looking in debug mode, I found that if the search is for host activedirectorypro.com, it will first search for “activedirectorypro.com.com.ar”, which obviously fails. After that failure it searches for “activedirectorypro.com”, which is resolved correctly. Why is this happening? Where should I look?

Thanks in advance…
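A likely explanation for the behavior described above is the DNS suffix search list: nslookup appends each configured suffix to any name that does not end with a trailing dot before it tries the name as typed. The sketch below only models that ordering; the function name is hypothetical, and the search list containing "com.ar" is an assumption based on the query shown in the comment.

```python
def nslookup_candidates(name, search_list):
    """Return the names tried, in order, for a given query.

    A name ending in "." is treated as fully qualified and tried as-is.
    Otherwise each suffix is appended first, and the original name is
    tried last.
    """
    if name.endswith("."):
        return [name]
    candidates = [f"{name}.{suffix}." for suffix in search_list]
    candidates.append(name + ".")
    return candidates

# Assumed suffix search list, for illustration only.
search_list = ["com.ar"]
print(nslookup_candidates("activedirectorypro.com", search_list))
print(nslookup_candidates("activedirectorypro.com.", search_list))
```

If this is the cause, querying with a trailing dot (activedirectorypro.com.) should skip the suffix attempts, and the suffix list itself can be reviewed in the client's DNS settings.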

What is the recommended DNS configuration for a single DC at a second site?

Is it best to point the second site’s DC at itself first, with secondary DNS pointing back to the PDC at head office? Or is it recommended to point at the head office PDC first, with itself as the second DNS server?

Best Regards,

Robert, love all the articles that you’ve written! Very clear, thorough and understandable. I do have a couple of questions though. I just ran the BPA on a new domain that I just created (I’m migrating our current domain to it) and received 3 errors and several warnings. Here they are:

1. DNS: DNS servers on Ethernet should include the loopback address, but not as the first entry… I double-checked, and the NIC adapters on both this DNS server and my alternate DNS server are set up as you suggested: primary is set to the other DNS server’s IP, and the alternate is set to the loopback address (127.0.0.1).

2. DNS: Zone TrustAnchors secondary servers must respond to queries for the zone… I have no idea about this one!

3. DNS: At least one name server in the list of root hints must respond to queries for the root zone… I have no idea about this one either!

There are also several warnings along the lines of “TrustAnchors secondary servers must respond to queries for the zone” and

“Root hint server 2001:dc3::34 must respond to NS queries for the root zone.” (Looks to me like an IPv6 issue?)

Thanks for all your help!

Rob

This is great, thanks for the article. One question: what do you think the MS best practice is when it comes to Infoblox and the root domain in a forest? (Allow the DCs to host MSDCS and then use conditional forwarders, hosting all other records in Infoblox, or let Infoblox host all the records?) If you can point me to an MS KB on this as well, that would be great. Thank you!

Very nice article! I have a question:

First a little background:

4 DCs with DNS (trying to decommission several DCs) – Internal DNS

2 external DNS servers

Right now, DC1 and DC2 are the DNS servers being pushed out via DHCP on DC3. DC1 has 8.8.8.8, the DC1 IP and the DC2 IP as DNS servers under its IPv4 settings.

DC2 has the same thing,

DC3 has DC1, DC2 and DC3,

DC4 has DC1 and DC2.

So should I remove the external entries from the servers and add all the IPs to each DC?

Second,

My external DNS Servers have Dc1 and 8.8.8.8 and ExDns1 and ExDn2 for both. Is this proper?

Thank you in advance

Are the DCs all in the same site? Do you have a requirement/need to keep the external DNS servers?

“Right now, DC1 and DC2 are the DNS servers being pushed out via DHCP on DC3.” I’m not sure I follow this. Are DC1 and DC2 set to get their IP information from DHCP? If that is the case, you definitely need to set the IP info on all DCs to static.

So helpful!

Fantastic checklist for DNS, thank you.

What is your recommendation for integrating a firewall into the DNS mix?

We have 2 DCs running DNS behind a Sophos XG, which is also a DNS server, and then 2 remote sites connected via SSLVPN through their own Sophos XG (no on-site DC).

Should the remote sites – which get DHCP from the Sophos XG – have the firewall as DNS 1 and the DCs as DNS2/3 or the other way round?

Thanks for taking the time to write this, it helped a lot!

We have a split DNS setup that I inherited, with internal and external DNS servers that both resolve OurName.com. I want to block internal lookups for the root domain (or our entire domain) from resolving to the external DNS, because our internal file shares are \\OurName.com\share_path but our marketing department wants the root to point to the external web server (for obvious reasons). What is the best way to restrict root hints or forwarders from going to external sources for our domain name?

Just make sure all internal clients are using the internal DNS servers and it will work, assuming a resource record is created in the lookup zone (see below). Your clients should not have the external DNS server configured in their DNS settings. Your internal DNS server should be configured to use your external DNS server as a forwarder or to use the root hints servers.

You should have a resource record in the forward lookup zone for the file server:

Lookup Zone: OurName.com

Record type: A

Name: fileserver

IP: 172.20.252.11

Now your clients can access the file share using the name fileserver.ourname.com.

Great article and equally helpful comments.

I came across a situation where we had a DNS zone set up only externally for a production website, MX entries for mail routing, etc. (i.e. ABC.com). Recently, the request was to set up an internal DNS zone for a UAT website (uat.abc.com) in lieu of mocking up a tester’s hosts file.

I created a new DNS zone for “abc.com” and proceeded to add an A record for only “uat.abc.com”. When performing an nslookup for “abc.com”, nothing came back. Only the nslookup for “uat.abc.com” returned a result.

What was missed here? Root hints gone awry? Or…

Should I set up an internal DNS zone for only uat.abc.com with a parent A record? Or…

Should I copy all the A records from the external DNS zone into my internal DNS zone?

The recommended setup is to create an internal zone for uat.abc.com and leave the external zone for abc.com as is. This is how I have my sites and Active Directory environment configured. My internal AD is ad.activedirectorypro.com and my website is hosted externally with a separate external DNS zone.
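To see why the narrower zone helps, here is a toy model of the lookup logic. It is a deliberately simplified sketch, not how Windows DNS is implemented: the function name, the zone dictionaries and all IP addresses are hypothetical. The one rule it models is that a server answers from a zone it hosts and does not forward queries for names inside that zone.

```python
# Records held by the external DNS provider (hypothetical addresses).
EXTERNAL = {"abc.com": "203.0.113.10", "www.abc.com": "203.0.113.10"}

def resolve(name, internal_zones, external=EXTERNAL):
    """Resolve a name against internal zones, else forward externally."""
    name = name.lower().rstrip(".")
    # Zones hosted internally that contain the queried name.
    matches = [z for z in internal_zones if name == z or name.endswith("." + z)]
    if matches:
        zone = max(matches, key=len)  # most specific zone wins
        # Authoritative answer: either the record exists or the lookup
        # returns nothing. The query is not forwarded.
        return internal_zones[zone].get(name)
    return external.get(name)

# Internal zone created for the whole domain, holding only the UAT record.
whole_domain = {"abc.com": {"uat.abc.com": "10.0.0.50"}}
# Internal zone created only for the UAT name.
uat_only = {"uat.abc.com": {"uat.abc.com": "10.0.0.50"}}

print(resolve("abc.com", whole_domain))  # internal zone shadows external
print(resolve("abc.com", uat_only))      # forwarded to external DNS
```

With the whole-domain zone, the lookup for abc.com finds no record internally and stops there. With the zone scoped to uat.abc.com, the same lookup falls through to the external zone while uat.abc.com still resolves internally.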

You are the best! Thank you for sharing Sir! Good Job!!!

Everywhere it says PRT it should say PTR instead.

Oops. I have fixed it.

Very nice article. I would like to see the part “Best DNS Order on Domain Controllers” extended with DNS ordering on DC servers in a multi site scenario as well. Assuming I have 10 sites with 2-3 DC servers each.

Raphael Ferreira – agreed! Thank you, so much. Share!

Another DNS Security tip would be to restrict DNS traffic at the firewall to only the allowed DNS resolvers (e.g. Quad9, OpenDNS, etc.) so other clients & devices don’t use their own DNS servers.

Good tip, thanks Zack.

It’s really helpful for beginners like me. Thanks for putting in such effort and making an exhaustive, informative article.

This is the most informative, useful, no-nonsense article on DNS I have found out there. THANKS!
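The firewall tip boils down to an allow-list decision for outbound DNS traffic. The sketch below models that decision only; it is not a configuration for any particular firewall. The function name and the rule shape are assumptions, and the resolver addresses are the public Quad9 and OpenDNS addresses.

```python
import ipaddress

# Public resolvers that outbound DNS is allowed to reach.
ALLOWED_RESOLVERS = {
    ipaddress.ip_address(ip)
    for ip in (
        "9.9.9.9", "149.112.112.112",        # Quad9
        "208.67.222.222", "208.67.220.220",  # OpenDNS
    )
}

def allow_outbound(dst_ip, dst_port):
    """Return True if an outbound packet should pass the firewall.

    Only DNS traffic (port 53) is restricted here: it must target an
    approved resolver. Other ports are left to the rest of the rule set.
    """
    if dst_port != 53:
        return True
    return ipaddress.ip_address(dst_ip) in ALLOWED_RESOLVERS

print(allow_outbound("9.9.9.9", 53))  # approved resolver
print(allow_outbound("8.8.8.8", 53))  # resolver not on the allow-list
```

A tighter variant would also check the source address so that only the internal DNS servers may send queries out, which forces clients and devices to go through them.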

That is what I was going for. Thanks for the feedback.

100% accurate, great article!

🙂